Link to Part 1:- Google File System Summarised Part 1

Link to Part 2:- Google File System Summarised Part 2

System Interactions

- Mutations obviously have to be performed on all the replicas. Say a client wants to mutate a file. Amongst the many replicas available, the master grants a lease to one of them (think of it as permission to be modified). They call this particular replica the Primary.

- The lease (permission) times out in 60 seconds. However, it keeps getting extended as long as the chunk is being mutated.

- The primary decides the order of mutations that are to be applied. All the other replicas follow it.
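The lease and ordering ideas above can be sketched in a few lines. This is only an illustration, not GFS code: the names `Lease`, `Primary`, `order` and `apply_in_primary_order` are made up for this example, and time is passed in explicitly so the behaviour is easy to follow.

```python
from dataclasses import dataclass, field

LEASE_SECONDS = 60  # initial lease timeout mentioned above


@dataclass
class Lease:
    primary: str        # chunkserver currently holding the lease
    expires_at: float   # absolute time (seconds) at which the lease lapses

    def is_valid(self, now: float) -> bool:
        return now < self.expires_at

    def extend(self, now: float) -> None:
        # Hypothetical helper: called while the chunk is still being mutated.
        self.expires_at = now + LEASE_SECONDS


@dataclass
class Primary:
    next_serial: int = 0
    log: list = field(default_factory=list)

    def order(self, mutation: str) -> int:
        # The primary picks one serial order for all incoming mutations.
        serial = self.next_serial
        self.next_serial += 1
        self.log.append((serial, mutation))
        return serial


def apply_in_primary_order(log):
    # Other replicas apply mutations sorted by the primary's serial numbers.
    return [mutation for _, mutation in sorted(log)]


lease = Lease(primary="S1", expires_at=0 + LEASE_SECONDS)
lease.extend(now=45)  # still mutating at t=45, so expiry moves to t=105

primary = Primary()
primary.order("write A")
primary.order("write B")
# Even if requests reach a replica out of order, the serial numbers fix the order.
replica_view = apply_in_primary_order(reversed(primary.log))
```

Here `replica_view` ends up in the same order the primary chose, regardless of the order in which the requests arrived at the replica.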

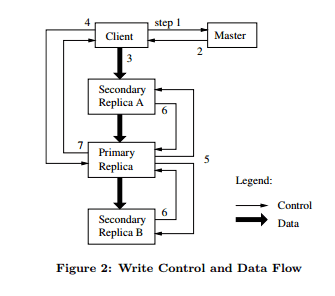

- Control and data flow are decoupled. All the replicas follow the primary for the order of mutation. Data is stored in each chunkserver’s buffer and used when the time comes. Process of data transfer is explained below.

- The client gets the location of the primary and all the replicas. It caches this information to reduce the involvement of the master.

- Data is passed to all the replicas. A scheme that exploits network topology is used rather than what you’d normally expect (i.e. passing to the primary and then to the replicas). Each server uses its full network bandwidth to pass data to the closest replica rather than splitting its capacity by passing to multiple servers simultaneously. Say the client wants to push to 4 chunkservers (S1 to S4). The closest server to the client is S1. The client uses all its bandwidth to pass data to S1. Closest to S1 is S2. S1 uses all its capacity to forward to S2 rather than splitting its transfer rate between S2 and S3. They also minimise delays by pipelining the transfer over TCP: a chunkserver starts forwarding as soon as it begins receiving (it does not wait for the entire data). They have a setup which enables them to estimate distances between chunkservers from IP addresses.

- When all the replicas have received the data, the client sends a write request to the primary. The primary assigns a serial number to every mutation it receives (possibly from multiple clients) and applies them in that order. Remember that it already has the data in its internal buffer. The primary then forwards the request to all the other replicas (it has already executed the mutation itself by now). They apply the mutations in the same serial order as the primary.

- The primary replies to the client. Errors encountered at the replicas are reported to the client. The mutation cannot have failed at the primary, as it only forwards requests after it’s done executing them. On errors, the client retries a few times. Details such as whether it retries only the failed chunks or all the chunks again aren’t delved into in the paper.

- Atomic Record Append (appending at least once at the end of a file, if you recall from the last part) follows a very similar process. However, unlike a regular write, the data cannot be written at an offset which the client chooses. The primary replica chooses the offset at which the record is appended (if many clients appending to the same file chose their own offsets, the offsets would be prone to clash and create undefined regions). There’s an additional check that happens. If appending the new data would cause the chunk to exceed 64 MB, the primary pads the current chunk to 64 MB and the append is retried on the next chunk. A single append cannot exceed 1/4th of the maximum chunk size, to keep such fragmentation under control. GFS only guarantees that data will be written at least once, but the replicas of a chunk might not be identical byte by byte (I’m a little fuzzy about this but I think differences are caused by different amounts of padding used or data being appended more than once on particular chunkservers).
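The padding check for record append can be sketched as below. This is a simplified model under my own assumptions: `record_append` is a hypothetical function that only tracks how many bytes of the last chunk are used, and the append limit is taken to be a quarter of the 64 MB chunk size, as in the paper.

```python
CHUNK_SIZE = 64 * 1024 * 1024   # 64 MB chunk size
MAX_APPEND = CHUNK_SIZE // 4    # a single append is capped at a quarter of a chunk


def record_append(chunk_used: int, record_len: int):
    """Return (action, offset, new_chunk_used) for the file's last chunk.

    action is "appended" when the record fits, or "padded_retry" when the
    chunk is padded to full size and the append must be retried on the
    next chunk.
    """
    if record_len > MAX_APPEND:
        raise ValueError("record larger than a quarter of the chunk size")
    if chunk_used + record_len > CHUNK_SIZE:
        # Pad the remainder of this chunk; the client retries on a new chunk.
        return ("padded_retry", None, CHUNK_SIZE)
    # The primary, not the client, picks the offset: the current end of the chunk.
    return ("appended", chunk_used, chunk_used + record_len)
```

Because of the cap on the record size, the padding wasted by one failed append in this model is always less than a quarter of a chunk.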

Snapshots

- Copies a file or directory almost instantaneously.

- Standard copy-on-write techniques used. Read more about it on Wikipedia.

- Used while creating copies or as a precautionary measure while creating checkpoints.

- All outstanding leases on the chunks of the file(s) being snapshotted are revoked first. This prevents mutations to the file(s) during the process.

- The newly created snapshot points to the same chunks as the source file (remember copy-on-write? To save resources a reference to the particular chunk is used rather than creating a duplicate copy). Actual copies or deep copies are only made when clients make a request to modify the chunks.

- On the first write operation to that chunk post the snapshot, the master notices that it has a reference count greater than one. The reference count keeps track of the number of shadow copies or references (as explained in the previous point) that exist of the particular chunk. Since it’s a mutation, the reference should now be turned into a real copy, as the original should not be touched (it is part of a snapshot if you recall). The master tries to optimise this process as well. Instead of sending the chunk over the network and creating copies across chunkservers, it asks the chunkservers that already have that particular chunk stored locally to create another copy. It grants a lease on the new chunk to one of these chunkservers. After all this is done it sends the client information about the primary.
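Here is a rough sketch of the reference-count bookkeeping behind copy-on-write snapshots. It models only the master's metadata, not the actual copying of chunk data on chunkservers, and the class and method names (`Master`, `snapshot`, `prepare_write`) are invented for this example.

```python
class Master:
    def __init__(self):
        self.files = {}      # file name -> list of chunk handles
        self.refcount = {}   # chunk handle -> number of files pointing at it
        self._next = 0

    def _new_handle(self) -> str:
        self._next += 1
        return f"chunk-{self._next}"

    def create(self, name: str, n_chunks: int) -> None:
        handles = [self._new_handle() for _ in range(n_chunks)]
        for h in handles:
            self.refcount[h] = 1
        self.files[name] = handles

    def snapshot(self, src: str, dst: str) -> None:
        # No data is copied: the snapshot shares the source's chunks.
        self.files[dst] = list(self.files[src])
        for h in self.files[dst]:
            self.refcount[h] += 1

    def prepare_write(self, name: str, index: int) -> str:
        # Called before a write to a chunk; returns the handle to write to.
        handle = self.files[name][index]
        if self.refcount[handle] > 1:
            # Shared chunk: give this file its own copy before mutating.
            self.refcount[handle] -= 1
            handle = self._new_handle()
            self.refcount[handle] = 1
            self.files[name][index] = handle
        return handle


m = Master()
m.create("/data/log", 2)
m.snapshot("/data/log", "/snap/log")
written = m.prepare_write("/data/log", 0)   # first write after the snapshot
```

After the write, `/snap/log` still points at the original two chunks, while `/data/log` has a fresh handle for the chunk it modified and keeps sharing the untouched one.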

Master Operations

Namespace Management and Locking

- The master needs to acquire locks for some of its operations, for example while taking a snapshot.

- An efficient locking mechanism over directories and files is used so that many operations can be performed concurrently.

- The master does not have a per-directory data structure that lists the contents of that directory. It just maps full pathnames to metadata (prefixes are compressed to reduce the RAM used by metadata).

- So `/home/user/data.dat` is actually a key with some metadata as its value. There are no directories as such in GFS. The metadata for the key `/home/user/data.dat` points to the relevant locations. Reading these locations enables the client to get the file.

- Each operation requires various locks. For example, for an operation on `/d1/d2.../dn/leaf` (leaf indicates the final directory or file it is performing an operation on) it will acquire read locks on `/d1`, `/d1/d2` … `/d1/d2.../dn` and a write lock on `/d1/d2.../dn/leaf`. Getting a write lock allows writing to `/d1..../dn/leaf`, and the read locks on the remaining directories prevent `/d1`, `/d1/d2` etc. from being deleted or renamed. To my understanding, directory `/d1` indicates all keys (full path file names) which start with `/d1`. Given full paths to files, if two file paths start with `/d1` they belong to the same directory.

- They give an example of how the locking system prevents a file from being created in a directory which is being snapshotted. I’m not sure if my understanding is correct, so it’d be better if I skip it. I couldn’t find any other link online which explained this particular section in depth.

- Multiple file creations can happen in the same directory as well. Both the writers acquire a read lock on the directory (the directory is protected from being renamed, deleted or snapshotted) and write locks on the files being written (this serialises attempts to create a file with the same name).

- Read-write lock objects are lazily allocated and deleted. Locks are acquired in a consistent order: first by level in the namespace tree and then lexicographically within the same level. Doing this (shallow to deep) prevents deadlocks.
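To make the locking rules a bit more concrete, here is a small sketch that computes which locks an operation needs and the order in which to take them. It is a toy model: `locks_for`, `acquisition_order` and `conflicts` are hypothetical helpers, paths are plain strings, and no real locking happens.

```python
def locks_for(path: str):
    """Read locks on every ancestor path, a write lock on the leaf itself."""
    parts = path.strip("/").split("/")
    ancestors = ["/" + "/".join(parts[:i]) for i in range(1, len(parts))]
    return [(p, "read") for p in ancestors] + [(path, "write")]


def acquisition_order(locks):
    # Sort by depth in the namespace tree, then lexicographically.
    return sorted(locks, key=lambda lock: (lock[0].count("/"), lock[0]))


def conflicts(a, b) -> bool:
    # Two operations conflict if both need the same path and one wants to write.
    held = dict(a)
    return any(p in held and "write" in (mode, held[p]) for p, mode in b)


create_a = locks_for("/home/user/a")
create_b = locks_for("/home/user/b")
# A snapshot of /home/user is modelled here as needing a write lock on it.
snapshot_user = locks_for("/home/user")
```

In this model `conflicts(create_a, create_b)` is false, so two files can be created in the same directory at once, while `conflicts(snapshot_user, create_a)` is true because the creation needs a read lock on the directory that the snapshot holds a write lock on.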

Replica Placement

- Physical placement of servers across racks.

- Make sure replicas exist in different racks to maximise data availability and integrity. Even if an entire rack is down due to an outage, clients can still read and write.

- They explain that the bandwidth in and out of a rack may be less than the sum of the bandwidths of the individual machines. Hence placing replicas in various racks lets clients exploit the combined bandwidth of several racks for reads. In the case of mutations, where the client has to send data, multiple racks are actually a disadvantage as the data has to travel longer distances. It’s a tradeoff that they are quite satisfied with.

Creation, Re-Replication, Rebalancing

Creation and Re-Replication

The goals of the master are to place new replicas on servers with below-average disk utilisation, spread replicas across racks, and limit the number of recent creations on each server (creation itself is cheap, but it predicts heavy write traffic to come, and chunks are often read by many clients even while being written, so a burst of new chunks on one server creates additional load).
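One way to picture these placement goals is as a simple selection heuristic. The sketch below is my own simplification, not the paper's algorithm: the `Server` fields, the `max_recent` cutoff and the exact tie-breaking are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class Server:
    name: str
    rack: str
    disk_used: float       # fraction of disk in use, 0.0 to 1.0
    recent_creations: int  # chunks created on this server recently


def pick_servers(servers, replicas=3, max_recent=5):
    avg = sum(s.disk_used for s in servers) / len(servers)
    # Skip servers with too many recent creations, then prefer below-average
    # disk use, fewer recent creations and emptier disks, in that order.
    candidates = sorted(
        (s for s in servers if s.recent_creations < max_recent),
        key=lambda s: (s.disk_used > avg, s.recent_creations, s.disk_used),
    )
    chosen, racks = [], set()
    for s in candidates:   # first pass: at most one replica per rack
        if len(chosen) < replicas and s.rack not in racks:
            chosen.append(s)
            racks.add(s.rack)
    for s in candidates:   # second pass: fill up if there were too few racks
        if len(chosen) < replicas and s not in chosen:
            chosen.append(s)
    return [s.name for s in chosen]


servers = [
    Server("s1", "r1", 0.2, 0),
    Server("s2", "r1", 0.3, 1),
    Server("s3", "r2", 0.9, 0),
    Server("s4", "r3", 0.4, 9),   # too many recent creations, skipped
    Server("s5", "r3", 0.5, 2),
]
placement = pick_servers(servers)
```

Note that this toy version favours rack diversity over disk utilisation, which is why it still picks the fairly full `s3`; a real master would have to weigh these factors against each other.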

Chunks need to be re-replicated if the number of copies falls below the threshold, for example because of data corruption on a server or a replica becoming unavailable (normally the threshold is set to 3 but it’s configurable as mentioned earlier). Instead of re-replicating all of them at once, the master prioritises re-replication to prevent these cloning operations from becoming bottlenecks. Restrictions are placed on the bandwidth each server may use for re-replication so that client requests aren’t compromised.

How are Re-replications Prioritised?

- A chunk which is two short of desired replicas is given precedence over a chunk which is short by one.

- Chunks of live files are given precedence over chunks of recently deleted files (discussed in the Garbage Collection section). Files that are marked as deleted are just renamed temporarily and deleted only after a few days, so replicas of them can exist for a few days as well.
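The two rules above can be expressed as a sort key. This is just a sketch: the dictionary fields and the way the two rules are combined (missing replicas first, then live before deleted) are my assumptions, not something the paper spells out.

```python
def replication_priority(chunk):
    missing = chunk["goal"] - chunk["live_replicas"]
    # Sort key: more missing replicas first, then live files before deleted ones.
    return (-missing, chunk["file_deleted"])


chunks = [
    {"id": "c1", "goal": 3, "live_replicas": 2, "file_deleted": False},
    {"id": "c2", "goal": 3, "live_replicas": 1, "file_deleted": True},
    {"id": "c3", "goal": 3, "live_replicas": 1, "file_deleted": False},
    {"id": "c4", "goal": 3, "live_replicas": 3, "file_deleted": False},
]

# Only chunks below their replication goal are queued for cloning.
queue = [
    c["id"]
    for c in sorted(
        (c for c in chunks if c["live_replicas"] < c["goal"]),
        key=replication_priority,
    )
]
```

With this key, `c3` (two short, live file) comes before `c2` (two short, deleted file), and both come before `c1` (one short).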

Rebalancing

The master also rebalances regularly to achieve the goals mentioned above. It may move replicas from one rack to another, try to bring disk usage on a server closer to the average and so on. Any new chunkserver is filled up gradually by the master rather than being flooded with loads of write operations.

Garbage Collection

Any file deletion is logged immediately but the resource isn’t deleted immediately. The file is renamed to a hidden name. The master regularly scans the filesystem and deletes such files if they have been hidden for more than 3 days. It also deletes the metadata of chunks that are not reachable from any file (it can check its file-to-chunk mapping and find chunks that are not used) or that belong only to deleted files. During the exchange of HeartBeat messages, the master can also instruct chunkservers to delete their replicas of the chunks described above.
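A minimal sketch of this lazy deletion scheme is shown below. It is a toy model of the master's bookkeeping only: the `Namespace` class, the hidden-name format and the `orphans` helper are made up for this example, and time is passed in explicitly.

```python
GRACE_SECONDS = 3 * 24 * 3600   # three days, as described above


class Namespace:
    def __init__(self):
        self.files = {}    # path -> list of chunk handles
        self.hidden = {}   # hidden name -> (deleted_at, chunk handles)

    def delete(self, path: str, now: float) -> None:
        # Deletion only renames the file to a hidden, timestamped name.
        # The name format here is hypothetical.
        self.hidden[f".deleted{path}@{int(now)}"] = (now, self.files.pop(path))

    def scan(self, now: float) -> None:
        # Regular namespace scan: drop hidden files past the grace period.
        for name, (deleted_at, _) in list(self.hidden.items()):
            if now - deleted_at > GRACE_SECONDS:
                del self.hidden[name]

    def live_chunks(self) -> set:
        handles = set()
        for chunks in self.files.values():
            handles.update(chunks)
        for _, chunks in self.hidden.values():
            handles.update(chunks)
        return handles

    def orphans(self, reported: set) -> set:
        # Chunks a chunkserver reports (e.g. in a HeartBeat) that no file uses.
        return reported - self.live_chunks()


ns = Namespace()
ns.files = {"/a": ["c1"], "/b": ["c2"]}
ns.delete("/b", now=0)
before = ns.orphans({"c1", "c2"})   # "/b" is only hidden, so nothing is orphaned
ns.scan(now=GRACE_SECONDS + 1)
after = ns.orphans({"c1", "c2"})    # now "c2" can be deleted by the chunkserver
```

Until the scan removes the hidden file, undeleting would only require renaming it back, which is where the safety net against accidental deletion comes from.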

Advantages Over Eager Deletion

- Bookkeeping is reduced. The master does not have to keep track of lost deletion messages (and keep retrying them) in an environment where component failures are common.

- Done in batches when load is less.

- Tagged along with regular activities like namespace scanning.

- Safety net against accidental deletions.

Disadvantages

- Storage isn’t available immediately. Poses problems when storage space is scarce. (It is possible to reduce the period to trigger deletion from 3 days to a lower value)

- Applications that frequently create and delete files might put the system under stress (storage is used immediately but freeing can take 3 or so days). To overcome all this the clients can specify directories that are to be stored without replication. They can also specify directories where deletion takes place immediately.

Stale Replica Detection

- The master increments the chunk version every time it grants a new lease on the chunk. The replicas that are up to date are informed and record the new version as well.

- If a replica isn’t available during a mutation, it misses the mutation and is left with an outdated version number. The master removes such stale replicas during regular garbage collection.

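- Additionally, the master includes the version number when it tells a client which chunkserver holds the lease for a mutation, and also when it tells a chunkserver to clone a chunk from another chunkserver, so that a stale replica can be spotted before it is used.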
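The version-number check can be sketched as follows. Again this is only an illustration with invented names (`ChunkVersions`, `grant_lease`, `stale_replicas`), tracking a single chunk and ignoring persistence.

```python
class ChunkVersions:
    def __init__(self, replicas):
        self.master_version = 1
        self.replica_version = {r: 1 for r in replicas}

    def grant_lease(self, reachable):
        # New lease: bump the version and tell every reachable replica.
        self.master_version += 1
        for r in reachable:
            self.replica_version[r] = self.master_version

    def stale_replicas(self):
        # A replica is stale if its version lags behind the master's.
        return sorted(
            r for r, v in self.replica_version.items()
            if v < self.master_version
        )


chunk = ChunkVersions(["S1", "S2", "S3"])
chunk.grant_lease(reachable=["S1", "S2"])   # S3 is down and misses the bump
stale = chunk.stale_replicas()
```

Here `S3` is the only replica left on the old version, so it is the one the master would hand to garbage collection.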

I haven’t covered Section 5 (Fault Tolerance and Diagnosis). Mostly because the post is already pretty long and also I’m lazy. Moreover Section 5 is only around a page long and can easily be grokked provided you have a reasonable idea of what I covered in these 3 parts.